How AI is transforming advertising

Mirriad’s Head Scientist Philip McLauchlan explains how you will soon see an ad for your favorite new product in your favorite old movie.

By Alison Coleman

AI-driven technology enables brands to deliver in-content advertising. Photo source: Mirriad

Imagine you’re watching one of your favorite old movies, Casablanca, on your tablet when Humphrey Bogart’s character Rick pours himself a glass of Dom Pérignon. Meanwhile, your spouse or partner is watching the same film on a different device and sees something entirely different: Ingrid Bergman’s character Ilsa Lund sipping a glass of Baileys on ice.

UK-based startup Mirriad’s AI-driven technology enables brands to deliver innovative product placements, or in-content advertising, a growing industry with global revenues worth $20.57 billion.

Mirriad’s Head Scientist Philip McLauchlan has a background in developing technologies for self-driving cars. In 1999, he founded his first company, Imagineer Systems, which developed algorithms for special effects in Hollywood movies; its technology was used in the 2010 hit Black Swan.

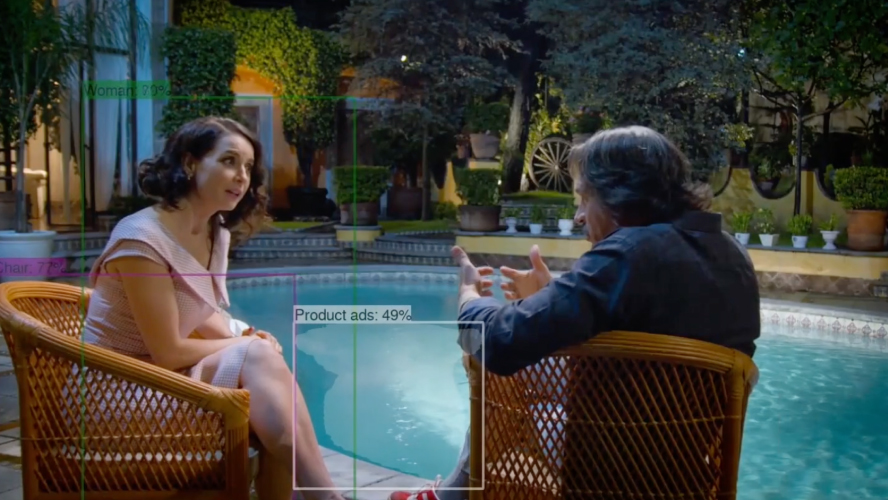

Mirriad’s machine learning algorithms scan films and TV shows looking for potential opportunities for ad placements. They analyze factors like scene setting and character sentiment to determine the context, which in turn helps recommend the most appropriate advertisement.
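The article doesn’t describe Mirriad’s models, but the general idea of matching scene context to a brand can be sketched. The minimal sketch below assumes each scene has already been labeled with a setting and a sentiment score (in practice, producing those labels is the machine-learning part). The `Scene` and `Brand` structures, the scoring rule and the time budget are hypothetical illustrations, not Mirriad’s method.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    """A candidate segment of a film or show (hypothetical structure)."""
    start_s: float    # segment start time in seconds
    end_s: float      # segment end time in seconds
    setting: str      # scene-setting label, e.g. "bar" or "kitchen"
    sentiment: float  # character sentiment in [-1, 1]


@dataclass
class Brand:
    """An advertiser's placement preferences (hypothetical structure)."""
    name: str
    settings: set[str]    # settings the brand considers a good fit
    min_sentiment: float  # lowest acceptable character sentiment


def context_score(scene: Scene, brand: Brand) -> float:
    """Score in [0, 1]; 0 if the setting doesn't fit or the mood is too negative."""
    if scene.setting not in brand.settings or scene.sentiment < brand.min_sentiment:
        return 0.0
    # Map sentiment from [-1, 1] to [0, 1] so more positive scenes rank higher.
    return (scene.sentiment + 1) / 2


def recommend(scenes: list[Scene], brand: Brand, budget_s: float) -> list[Scene]:
    """Greedily pick the best-scoring scenes until the time budget is used up."""
    ranked = sorted(scenes, key=lambda s: context_score(s, brand), reverse=True)
    chosen, used = [], 0.0
    for scene in ranked:
        length = scene.end_s - scene.start_s
        if context_score(scene, brand) > 0 and used + length <= budget_s:
            chosen.append(scene)
            used += length
    return chosen
```

A greedy pick by score is the simplest choice here; it mirrors the “find the five minutes in a hundred hours” framing, where only a small fraction of the content is expected to qualify.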

Mirriad has also signed a deal with management company Red Light Management and record label B-Unique Records to insert ads into music videos.

McLauchlan foresees these kinds of placements growing from a niche into the norm. In a conversation with the Amazon re:MARS team, McLauchlan spoke about the AI-led future of advertising.

What is Mirriad doing in computer vision that hasn’t been done before?

The goal of Mirriad is to place embeds seamlessly into content, as though they were already there. That’s quite a standard part of the visual effects armory, but we are trying to do it at a much bigger scale. So, the tools we have to create are really designed around automation.

The process starts with getting lots of content and then drilling down into it to find the exact places where advertisements can be placed. That’s the challenge: given a hundred hours of content, can you find the five minutes where your brand will work? This is where machine learning is necessary, because there’s no other way to approach that problem.

Our algorithms consider the quantity and the quality of data and determine how ambiguous that data is. There are canonical problems in machine learning. Take pictures of cats, for example: what do pictures of a cat have in common? And then it moves on to: what distinguishes them from pictures of a dog? Emotion is, in essence, the same kind of problem. If two people look at the same scene, might they experience different emotions? If so, it becomes a more difficult problem, because you’re not going to get the same response.

The difficult part about emotional understanding is that there will be differences of opinion. I wouldn’t say we’ve created the perfect solution for this, but it’s very important to us that we can extract emotional data, and we’re doing the best we can to use emotion to allow brands to connect to the content. If brands prefer content that evokes a certain emotional response, this is how we want to deliver that.
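One common way to quantify the “differences of opinion” described here is to have several people label the emotion of the same scene and measure how much they disagree. The sketch below uses normalized Shannon entropy for that. It is a generic technique chosen for illustration, not a description of how Mirriad measures ambiguity, and the emotion labels and the `label_ambiguity` function are hypothetical.

```python
import math
from collections import Counter


def label_ambiguity(labels: list[str], num_categories: int) -> float:
    """Normalized Shannon entropy of annotators' emotion labels for one scene.

    Returns 0.0 when every annotator agrees and 1.0 when the labels are spread
    evenly across all `num_categories` possible emotions.
    """
    if num_categories < 2:
        raise ValueError("need at least two emotion categories")
    if not labels:
        return 0.0  # no labels collected: treat as no measurable disagreement
    total = len(labels)
    counts = Counter(labels)
    # Entropy in bits: sum of p * log2(1/p) over the observed labels.
    entropy = sum((c / total) * math.log2(total / c) for c in counts.values())
    # Divide by the maximum possible entropy for this many categories.
    return entropy / math.log2(num_categories)
```

A scene whose score is close to 1 is one where viewers genuinely disagree, so a system might weight its emotion label less, or leave it out, when matching brands to content.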

We’re looking to leverage the technology we have, which is very much about complex visual effects that we can build as a composite, using all the rendering architecture we’ve built to make Mirriad work.

On the design side, it’s really about building the complete integrated embed, which could be 2D or 3D, with lots of different effects so that it looks correct in every piece of content, and then putting that into a real-time engine. We’re not talking about banner ads here; we’re talking about something much more dynamic and complex than that, and we’ve taken advantage of what we’ve created in the company.

What are the primary technical challenges in embedding ads in content?

The goal is to place embeds seamlessly into content, as if they were already there. You start with the ability to track backgrounds, but then you have to move into other areas. For example, how do you ensure the new embed is properly lit? How do you make it look right against the background? How do you know which kinds of brands will work? What can you place in the content?

None of these things are obvious if you look at them purely from the old, geometric style of computer vision, which is what I was used to. It’s more a question of how you solve problems of scale, and that’s really where the newer methods in machine learning have come to the fore. That’s why we’ve moved into them in a big way in the last five years. Seamless compositing is quite a standard part of the visual effects armory, but we are trying to do it at a much bigger scale while using a much smaller team, so we have to create tools that are really designed around automation.

In your opinion, what does the future of TV viewing look like?

Ideally, the number one thing would be no ad breaks. We see ourselves as creating a way to avoid them, because brands can get their exposure without interrupting the content viewers are watching. It’s a certainty that nobody likes ads.

I think in the US, people are already used to product placement, but in the UK, for example, it’s still quite a new thing. Content creators need this in order to make money from what they’re doing, and it allows advertising to live inside the content. That’s something I’d like to see.

We have technology in development that will create multiple versions of the same ad. There are lots of algorithms you could use, or approaches you could take, to decide who sees which ad. It’s about targeting and finding the best approach to targeting.
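McLauchlan doesn’t say which targeting algorithms Mirriad uses. As one hypothetical illustration, the sketch below chooses among several versions of the same placement by matching each version’s audience tags against a viewer’s interests, then breaks ties with a hash of the viewer and title so a given viewer sees the same version every time. The `Variant` type, the tag-overlap rule and the hashing tie-break are all assumptions made for this example.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Variant:
    """One version of a placement (hypothetical structure)."""
    name: str
    tags: set[str] = field(default_factory=set)  # audience interests it targets


def pick_variant(viewer_id: str, interests: set[str], content_id: str,
                 variants: list[Variant]) -> Variant:
    """Pick the variant whose tags best overlap the viewer's interests."""
    if not variants:
        raise ValueError("need at least one variant")
    # Targeting step: keep only the variants with the largest tag overlap.
    best = max(len(v.tags & interests) for v in variants)
    candidates = [v for v in variants if len(v.tags & interests) == best]
    # Deterministic tie-break: hash (viewer, title) so replays are consistent
    # while different viewers are spread across the tied candidates.
    key = f"{viewer_id}:{content_id}".encode("utf-8")
    index = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % len(candidates)
    return candidates[index]
```

For example, a viewer whose interests include `"desserts"` would get a variant tagged with `"desserts"`, while a viewer with no matching interests falls back to a stable, hash-chosen version.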

But the big technological problem for us is how to get partnerships going that let us stream. Having different versions, and being able to stream them, means each view could involve a different decision about who sees which ad. This is something we’ve been working on since 2015.

My current project is the live project: finding a way to have more flexibility in what you can place into live content. If the live project takes off, experiences like live sports, for example, would be very different.

Whenever I explain to people what I do, probably 50% or 60% of them will immediately say, “Oh, that’s like subliminal advertising,” which it isn’t. But the idea that you’re fooling the viewer by adding this content is ingrained in people, especially in a live context. In the end, what we’re doing is augmented reality, and I think that’s where the world is heading.