Should robots lie?

Roboticist Kantwon Rogers talks about the ethical implications of designing robots that lie to humans in order to produce beneficial outcomes.

Kantwon Rogers, Roboticist

Imagine you want to teach your child to draw. You decide to use an approach that makes your son or daughter feel empowered. After all, research has shown that a “learning by teaching” approach is effective because it compels the person teaching to mentally recall the information previously studied.

You tell your child that you don’t know how to draw. During the lessons, you ask your child for guidance on drawing shapes. Your approach is effective: your child masters drawing basic shapes in half the time it would have taken with a more traditional approach.

Did you lie to the child?

Different philosophical schools of thought would have no doubt that you lied, but they might offer contrasting opinions on whether your actions were morally questionable. From a Kantian perspective, for example, every human being has an inherent dignity that comes from the capacity to reason. By telling the child that you don’t know how to draw basic shapes, you deceived them instead of respecting that capacity, and so you violated this dignity.

In contrast, a utilitarian ethicist would point out that your actions weren’t self-serving. On the contrary, they were designed to help the child, and they did. As a result, even though you lied to the child, your lie was justified.

Now, let’s make matters even more interesting. Imagine that the instructor wasn’t a person but a robot. Would it be ethical to design a robot that explicitly lies to your child? Would you purchase such a robot and bring it into your home?

As a roboticist, my work has focused on designing effective human-computer interactions that produce positive outcomes for the people involved. In the course of my research, my colleagues and I have designed experiments to understand the practical and ethical implications of robots that are explicitly programmed to lie to humans.

To give just one example, a team at my alma mater, Georgia Tech, led by roboticist Dr. Ayanna Howard, developed a virtual reality game called Super Pop to improve the kinetic movements of children diagnosed with cerebral palsy.

Children participating in the experiment had to move their arms to pop bubbles on a computer screen. A robot named Darwin provided continual verbal encouragement throughout. The feedback was carefully designed to be positive in order to motivate the child. A post-experiment study found that the robot’s positive feedback, which strictly speaking could range from genuine praise to outright deception, produced a tangible improvement in the children’s performance.

Deceptions delivered by robots have also been used to produce positive outcomes in adults. For example, robots have been used to help stroke survivors regain lost motor function. I read about a particularly interesting experiment in which researchers designed a robotic system to distort patients’ perception of their own performance during therapy.

The hypothesis was that the progress of patients undergoing physical therapy is influenced not only by the goals established with the instructor, but also by the patients’ own perception of their progress. To prevent patients from setting goals below their capabilities, the experimenters implemented a two-step process.

First, the robot displayed a metric that patients could use to measure their performance. The robot then deliberately “adjusted” this visual metric, pushing patients to raise their performance to a higher level. The deliberate distortion worked. By the end of the experiment, the researchers had shown that the altered visual feedback got patients to increase the amount of force they used to displace objects. They recovered faster.

So far in this article, we have discussed robots using verbal or visual deception for highly specialized purposes in areas like physical therapy and education. Judging by the examples above, you might think that deception in robots has been limited to esoteric areas with the express purpose of producing largely positive outcomes, and that, from a utilitarian perspective, there is no explicit harm in designing robots that lie.

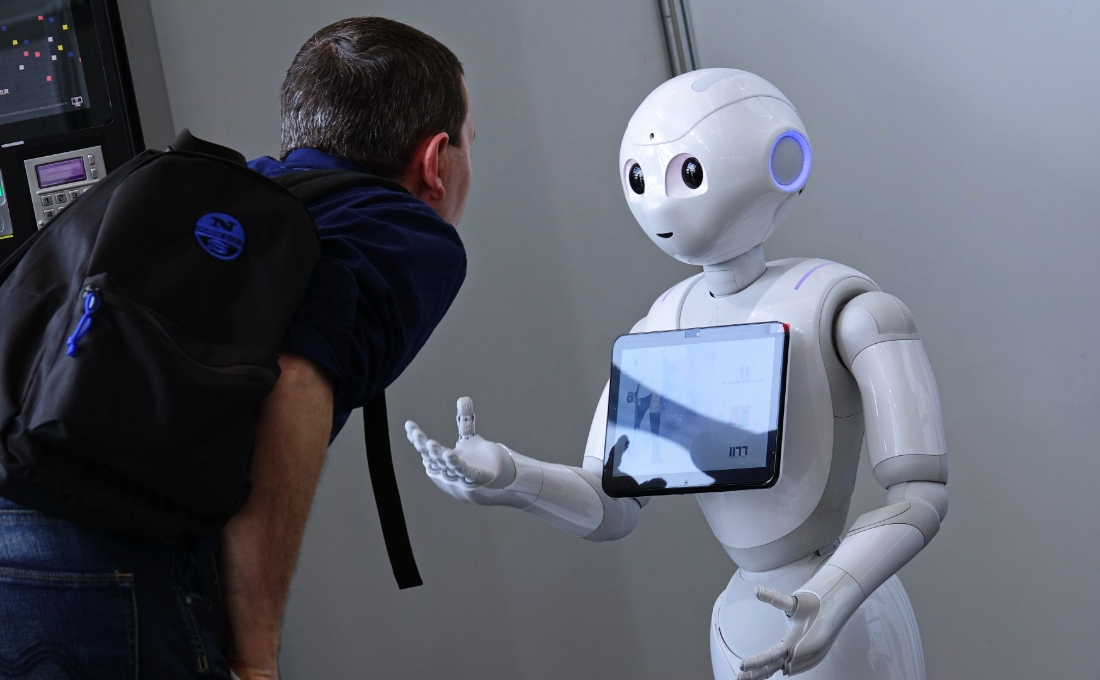

However, it bears noting that, far from being an outlier, deception is the norm when it comes to robots. Most robots we encounter, for instance, have deception encoded in how they look. Robots like SoftBank’s Pepper have been carefully designed to look like humans. The design choices behind Pepper’s appearance are no accident: people place a greater degree of trust in robots that look like humans.

The results of “Looks can permit deceiving,” a 2021 experiment that Dr. Ayanna Howard and I developed, showed that people are more likely to choose to reward a physically embodied intelligent agent than a virtual one, regardless of whether the agent has been deceptive or honest. This trust held even in situations where the agent’s deception caused the individual to lose money.

Another experiment we conducted at Georgia Tech offered a startling example of how much humans trust physically embodied robots. Participants interacted with a robot that was programmed to deliberately give them wrong directions. Researchers then simulated an emergency, using smoke and fire alarms to create the illusion of a fire. Surprisingly, every participant followed the robot’s instructions for evacuating the building, despite having seen the same robot perform miserably at a navigation task only minutes before.

This experiment and many others find that humans tend to over-trust robots. However, there are nuances to this finding. The results of more recent experiments conducted at my lab present an interesting paradox. They show that when an artificial intelligence agent lies to a participant, even when the participant benefits from the deception, the participant then expects the agent to act maliciously in other scenarios. To put it simply, there is a danger that humans will stop trusting robots after being exposed to their deceptions.

Should we then eliminate deception from robot design altogether? Given the clear benefits of using deception to produce positive outcomes (think of improving the range of motion of children with cerebral palsy), it would be unethical to insist on designing only robots that never lie. However, it doesn’t take a huge mental leap to see how robot designers might use the same justification to build robots that serve malicious purposes and ultimately cause harm to humans.

This is why it’s crucial that we operate within a framework of effective regulations from the government or a third-party body. However, as we’ve seen with new technologies ranging from social media to facial recognition, the law frequently moves more slowly than technology. We can’t just wait for the government to issue effective regulations. Organizations like the IEEE have already begun this work: a recent document on ethically aligned design seeks to spark a discussion on aligning the design of artificial intelligence with values and ethical principles that maximize human well-being.

In this era of “The Fourth Industrial Revolution,” members of academia, industry, think tanks, and the government need to actively talk and listen to each other, and begin work that informs future policy decisions. Robots have the potential to bring about meaningful and positive changes to our lives. We should do everything we can to craft regulations that allow every one of us to build trusting relationships with robots, similar to those we enjoy with other humans.
